Image: Dia Dipasupil/Wire Images via Getty

If you haven’t heard already, SpaceX CEO Elon Musk is dating the electronic musician Grimes, a match that’s about as unlikely as Musk’s timeline for settling Mars.

Before the couple, henceforth known as ‘grusk,’ made their first public appearance together at the Met Gala on Monday, Musk tweeted what would otherwise have been yet another unremarkable dad joke on the billionaire’s timeline. The tweet read “Rococo’s Basilisk,” a play on words that mixes the name of an 18th-century baroque art style (Rococo) with the name of an internet thought experiment about artificial intelligence known as ‘Roko’s Basilisk.’

According to Page Six, which broke the news of grusk, it was this non-joke that sparked Musk and Grimes’ relationship. Apparently Musk was going to tweet about ‘Rococo’s Basilisk’ a few weeks ago when he discovered that Grimes had made the same joke three years earlier, and he reached out to her about it.

One can only speculate on the awkwardness of Musk sliding into your DMs with a “hey, we make similar jokes” pickup line, but it’s notable that an internet thought experiment has captured the minds of two people as different as a multi-platinum musician and a union-busting billionaire.

So what’s up with Roko’s basilisk?

The thought experiment was originally posted in July 2010 by a user named Roko to Less Wrong, a forum and blog about, broadly speaking, rationality, psychology, and artificial intelligence. At the most basic level, the thought experiment is about the conditions under which it would be rational for a future artificial superintelligence to kill the humans who didn’t help bring it into existence.

The thought experiment is based on a theory first postulated by Less Wrong’s creator Eliezer Yudkowsky called coherent extrapolated volition, or CEV. The theory itself is pretty dense, but for the purposes of the Roko thought experiment, it can be treated as a hypothetical program that causes an artificial superintelligence to optimize its actions for human good.
Yet if a superintelligence makes all its choices based on which one is best suited to achieving ‘human good,’ it will never stop pursuing that goal, because things could always be a bit better. Since there’s no predefined way to achieve a goal as nebulous as ‘human good,’ the AI may end up making decisions that seem counterintuitive to that goal from a human perspective, such as killing all the humans who didn’t help bring it into existence as soon as possible. From the AI’s perspective, however, this route makes sense: If the goal is achieving “human good,” then the best action any of us could possibly be taking right now is working toward bringing a machine optimized to achieve that goal into existence. Anyone who isn’t pursuing that goal is impeding progress and should be eliminated in order to better achieve the goal.

Roko’s basilisk is so often cited as the “most terrifying thought experiment of all time” because now that you’ve read about it, you’re technically implicated in it. You no longer have an excuse not to help bring this superintelligent AI into existence, and if you choose not to, you’ll be a prime target for the AI.

This is also where the thought experiment gets its ‘basilisk’ name. There’s a great science fiction short story by David Langford about a type of image called a “basilisk” that contains patterns deadly to anyone who looks at it. In the story, a group goes around putting these images up in public places in the hope that they will kill unsuspecting passersby who happen to look at them.

When Roko posted his basilisk theory to Less Wrong, it really pissed off Yudkowsky, who deleted the post and banned all discussion of the basilisk from the forum for five years.
When Yudkowsky explained his actions in the Futurology subreddit a few years after the original post, he claimed that Roko’s thought experiment assumed that a number of technical obstacles in decision theory could be overcome, and that even if this were possible, Roko was effectively spreading a dangerous idea, much like the villains in Langford’s story.

“I was caught flatfooted in surprise because I was indignant to the point of genuine emotional shock at the concept that somebody who thought they'd invented a brilliant idea that would cause future AIs to torture people who had the thought [and] had promptly posted it to the public Internet,” Yudkowsky wrote to explain why he ‘yelled’ at Roko on the forum.



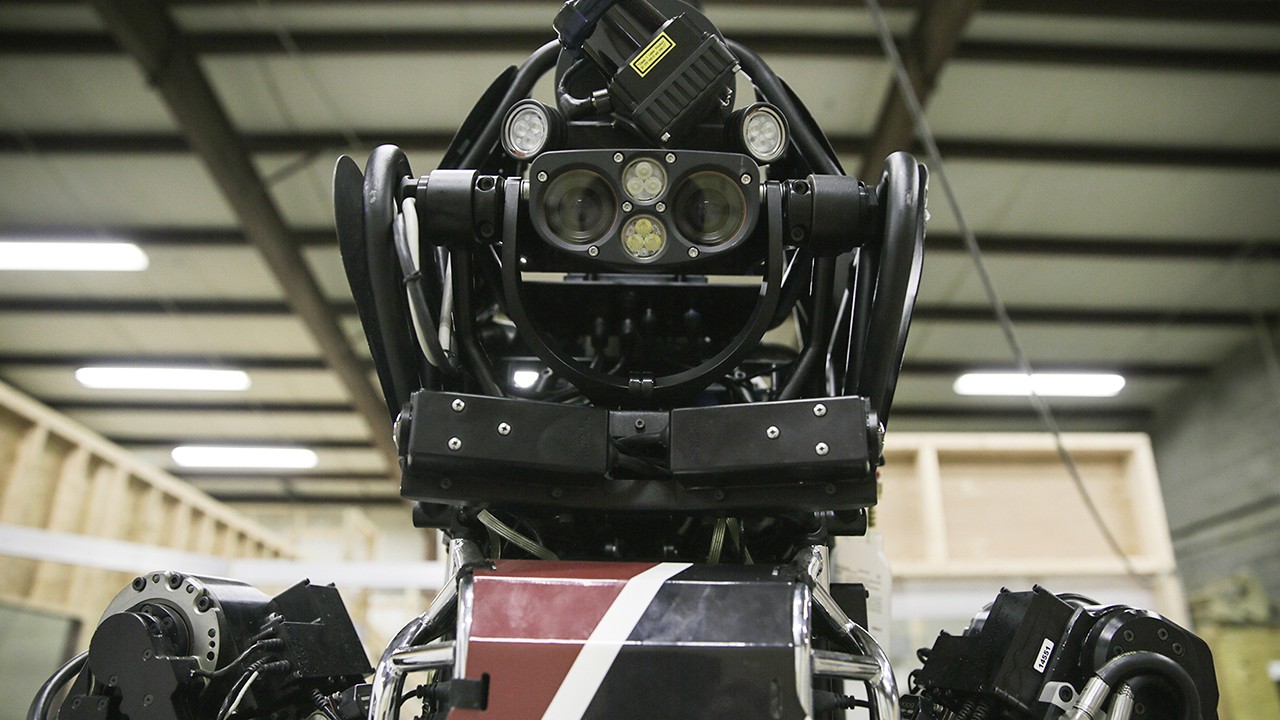

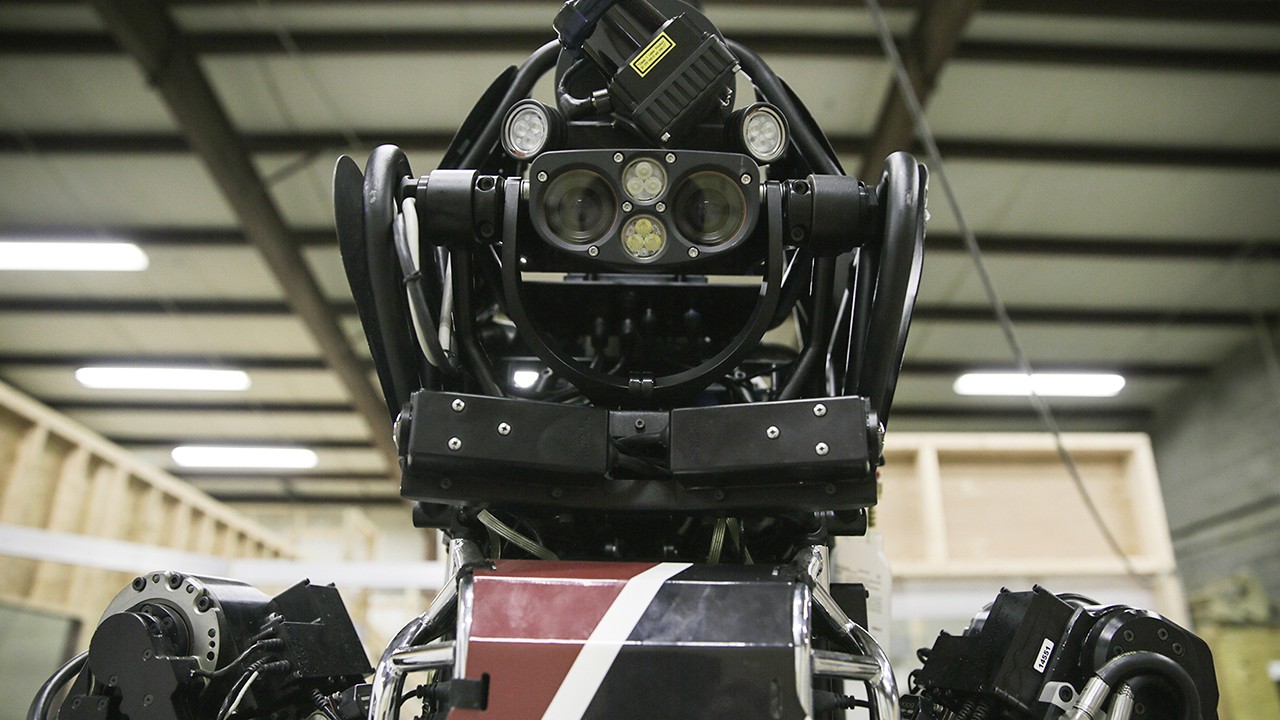

It’s no surprise that Musk finds Roko’s basilisk attractive. The darling of Silicon Valley has made his feelings about the dangers posed by superintelligent AI well known by co-founding OpenAI, a nonprofit dedicated to pursuing ethical artificial intelligence that he left shortly thereafter, and by sponsoring Do You Trust This Computer?, a less-than-stellar documentary about the threat of artificial intelligence.

For now, anything approaching superintelligent AI remains a distant goal for researchers. Most AI systems today have a hard enough time telling a turtle apart from a gun, which means the biggest threats from machine learning are still pretty banal, such as getting hit by a self-driving car or having your face spliced into a porn movie. Nevertheless, serious threats from machine learning, like autonomous weapons, are on the horizon, and the downsides of AI are worth taking seriously, even if they’re not quite as much of an existential threat as Roko’s basilisk.

And hey, even if Roko turns out to be right, you can at least take solace in the fact that his thought experiment helped a billionaire find love.

Read More: Top Researchers Write 100-Page Report Warning About AI Threat to Humanity