How the challenges of making fonts readable by machines influenced typography

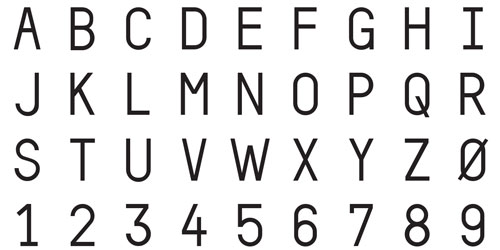

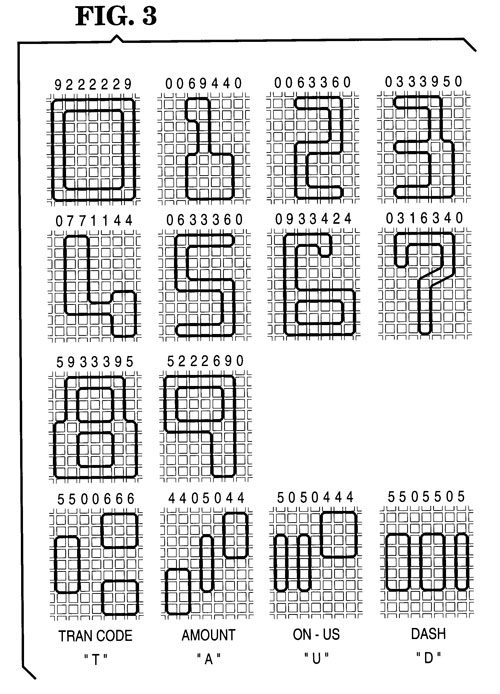

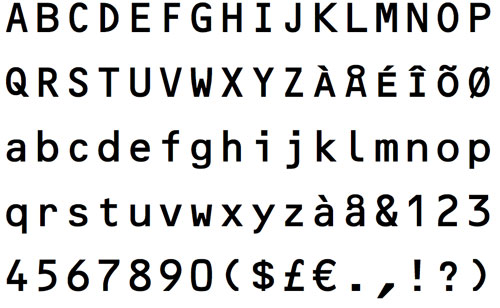

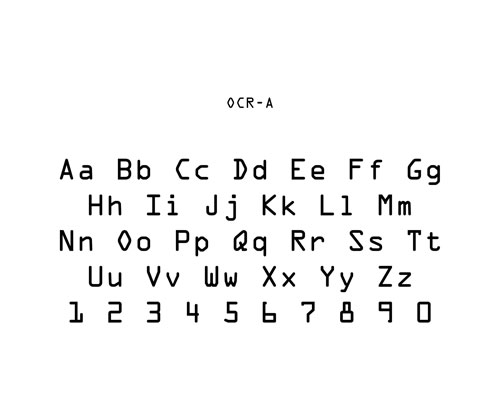

OCR-A wasn't the first font to tackle these machine-scanning issues, but it was a major step forward in that it was a complete alphabet that was readable by both machines and humans. Previously, the most well-known use for such technology involved something you're probably familiar with if you've cashed a check sometime in the last 60 years: Magnetic Ink Character Recognition, or MICR.Many of the typefaces used today are derived from medieval calligraphy, only slightly modified by the limitations of early printing technologies (wood blocks and moveable type). To imitate fine penmanship, vertical strokes are thick compared to horizontal strokes, and NW-SE diagonals are thicker than NE-SW diagonals. Therefore curved strokes may vary in width according to their local orientation. In contrast, typefaces specifically designed for accurate OCR, such as OCR-A and OCR-B fonts, have uniform stroke widths and exaggerated distinctions between similar symbols such as Ο and 0 or 1 and l.

— Technologist Ray Kurzweil, describing the chance encounter that gave a purpose the project that he was working on at the time. This led Kurzweil to build his company's technology around this specific use case, the result becoming the Kurzweil Reading Machine. The device could scan a given page, parse the text on the page, and recite it to the person who wanted to read the page. It was a definite upgrade from vinyl books, and it helped generate excitement about OCR technology in the mid-1970s."I happened to sit next to a blind gentleman on a plane flight, and he explained to me that the only real handicap that he experienced was his inability to read ordinary printed material. It was clear that his visual disability imparted no real handicap in either communicating or traveling. So I had found the problem we were searching for."

Five key points in the evolution of optical character recognition

- In 1952, onetime National Security Agency employee David H. Shepard started a company called Intelligent Machines Research Corporation to help commercialize a product that could read. "We built it in my attic," he told the Associated Press in 1954.

- Also in the 1950s, Jacob Rabinow, an employee of the National Institute of Standards and Technology, built a reading machine of his own, this one built on an approach called "best match," which detected the letter by comparing it to every character in the alphabet and suggesting an answer. "It enabled us to read very poor printing that could not be read by any other means," Rabinow recalled. It could only read one character a minute, but soon, it got much better.

- In 1965, the US Postal Service, which had since 1957 been using a sorting machine built by Rabinow, installed an OCR machine at the Detroit Post Office. The devices, according to a 1970 Post Office video, were expected to read at a speed of 42,000 addresses per hour.

- In 1980, Ray Kurzweil sold his company Kurzweil Computer Products to Xerox, which further developed his technology into something that proved valuable to the business world. The base company, later sold off by Xerox, still exists to this day under the name Nuance.

- In the early 1990s, handheld scanners, which relied on careful movement on the part of the user, became very popular among computer users, selling hundreds of thousands of units per year. These products, popular with desktop publishing, further gained usage after the TWAIN standard helped bring a sense of consistency to the scanner market. Eventually, flatbed scanners—which offer better quality and more consistency—took over the market.