Screenshot elements from PornStarByFace / Left: Lara Croft from Tomb Raider, right: porn performer Lavish Styles / Image: Samantha Cole

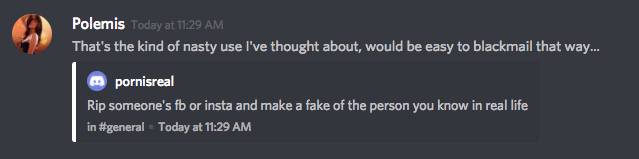

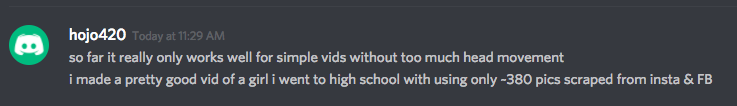

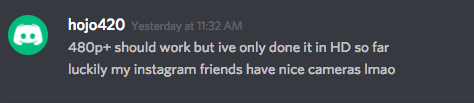

Earlier this week, we reported on a subreddit called "deepfakes," a growing community of redditors who create fake porn videos of celebrities using existing video footage and a machine learning algorithm. This algorithm is able to take the face of a celebrity from a publicly available video and seamlessly paste it onto the body of a porn performer. Often, the resulting videos are nearly indistinguishable from reality. It’s done through a free, user-friendly app called FakeApp.

One of the worst-case uses of this technology raised by computer scientists and ethicists I talked to is already happening. People are talking about, and in some cases actively using, this app to create fake porn videos of people they know in real life—friends, casual acquaintances, exes, classmates—without their permission.

Some users in a deepfakes Discord chatroom where enthusiasts were trading tips claimed to be actively creating videos of people they know: women they went to high school with, for example. One user said that they made a “pretty good” video of a girl they went to high school with, using around 380 pictures scraped from her Instagram and Facebook accounts.

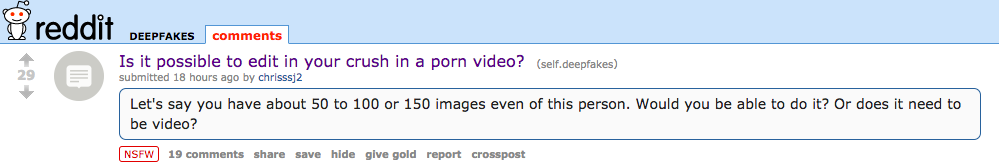

Even more people on Reddit and Discord are thinking aloud about the possibilities of creating fake porn videos of their crushes or exes, and asking for advice on the best ways to do it.

I’ve spent much of the last week monitoring these communities, where certain practices are already in widespread use among the tens of thousands of people subscribed to deepfake groups. The practices are an outgrowth of behavior that has long been practiced on Reddit and in other online communities, and that has gone largely unpoliced by platforms. Our hope is that in demystifying the communities of people doing this, victims of nonconsensual, AI-generated porn will be able to understand and articulate the tactics used against them—and that the platforms people entrust with their personal data get better at protecting their users, or offer clearer privacy options for users who don't know about them.

Update: After we published this story, Discord shut down the chatroom where people were discussing the creation of deepfake porn videos. "Non-consensual pornography warrants an instant shut down on the servers whenever we identify it, as well as permanent ban on the users," a Discord representative told Business Insider. "We have investigated these servers and shut them down immediately."

Open-source tools like Instagram Scraper and the Chrome extension DownAlbum make it easy to pull photos from publicly available Facebook or Instagram accounts and download them all onto your hard drive. These tools have legitimate, non-creepy purposes. For example, allowing anyone to easily download any image ever posted to Motherboard's Instagram account and archive it means there's a higher likelihood that those images will never completely disappear from the web. Deepfake video makers, however, can use these social media scrapers to easily create the datasets they need to make fake porn featuring unsuspecting individuals they know in real life.

Once deepfake makers have enough images of a face to work with, they need to find the right body of a porn performer to put it on. Not every face fits perfectly on every body because of differences in face dimensions, hair, and other variables. The better the match, the more convincing the final result. This part of the deepfake making process has also been semi-automated and streamlined.

To find the perfect match, deepfakers are using browser-based applications that claim to employ facial recognition software to find porn performers who look like the person they want to make face-swap porn of. Porn World Doppelganger, Porn Star By Face, and FindPornFace are three easy-to-use web-based tools that say they use facial recognition technology to find a porn lookalike. None of the tools appear to be highly sophisticated, but they come close enough to help deepfakers find the best porn star for creating deepfakes. A user uploads a photo of the person they want to create fake porn of, the site spits out an adult performer they could use, and then the user can go searching for that performer's videos on sites like Pornhub to create a deepfake.

At this time, Motherboard doesn't know exactly how these websites work, beyond their claims to be using “facial recognition” technology. We contacted the creators of Porn World Doppelganger and Porn Star By Face, and will update when we hear back. Porn Star By Face says it has 2,347 performers in its database, pulled from the top videos on Pornhub and YouPorn. We don’t know whether the website sought these performers’ permission before adding them to this database.

A spokesperson for FindPornFace told me in a Reddit message that their app is “quite useless” for use in conjunction with FakeApp to make videos, “cause [sic] this app needs not just similar face by eyes, nose and so on, they need similar shape of the head, jaw, skin color and so on,” they said. “It is different task. But yes, some of babes from search by photo results can be used to find a star for creating fake video. It is true.”

The Porn Star By Face website says it's working on improving the algorithm it uses: “The neural network is trained with every request you make, so please share the link with your friends. We strive to provide a high level of accuracy. We use several photos from different angles to create a template of an actress. After creating a template we test and correct errors.”

FindPornFace comes with a disclaimer: “By using our service, you agree to not upload any content depicting any person under the age of 21 and/or without that person's explicit permission.” Porn World Doppelganger’s site implies users are meant to upload photos of themselves, not someone else’s. But it also presents several quick-link options for searching celebrity lookalikes, including Selena Gomez, Nicki Minaj, Kylie Jenner, and Courteney Cox.

Tools like FindPornFace are just automating part of a process that communities on Reddit, 4chan, and notorious revenge porn sites such as Anon-IB have been practicing manually for years: finding doppelganger porn based on photos uploaded to these sites (and, to some extent, finding “matching bodies” for Photoshopping faces of celebrities onto porn performers).

One of these communities, the “doppelbangher” subreddit, has nearly 36,000 subscribers (another, misspelled “dopplebangher” subreddit has 11,000). Many, if not most, of the posts in this subreddit are of people the requester knows: friends, classmates, moms of friends, casual acquaintances, crushes from afar. Users post photos to the subreddit (seemingly nearly always without consent) and other users comment with their best guesses at porn performers who look like the person in the photo. FindPornFace has a Reddit account that comments frequently with its results.

The moderators of r/doppelbangher advise in the subreddit's rules that users avoid posting photos that can be traced back to social media accounts, by uploading them to a hosting site like Imgur first. Posting personal information, as well as revenge porn, will result in a ban, they write. “Please be aware of how someone might feel about finding their picture here.”

I've seen redditors make requests for “friend’s stepmom,” “coworker of mine,” “college friend,” “a friend of mine and my crush,” and “hottest girl in engineering,” paired with photos of them taken from social media.

If the owner of the photo finds it, they can request to have it taken down. But with the aforementioned rules about untraceable photo posting, how would someone find themselves in this subreddit without a daily scan for their own face?

All of this exists in a privacy law gray area, as Wired explained in its story about the legality of deepfakes. Celebrities could sue for misappropriation of their images. The rest of us have little hope of legal recourse. Revenge porn law doesn’t include language about false images like a mashup of your face on another body. Much of the responsibility to prevent this falls on platforms themselves, and on policies like anti-defamation statutes catching up to the technology.

“Creating fake sex scenes of celebrities takes away their consent. It’s wrong,” a porn performer told me in December, when we first covered deepfakes. Much of the attitude concerning doppelgangers and image manipulation is rooted in seeing celebrities—especially female celebrities—and porn performers as commodities, not “real” people. It’s a bias that shows up occasionally when the deepfake community discusses public figures as opposed to, say, their next-door neighbors or children.

We’ve contacted Reddit and the moderators of these communities, and will update when we hear back.
