Twitter users reported about 2.7 million accounts for hateful content in the first half of 2018. About 10 percent resulted in Twitter taking action. Image: Shutterstock

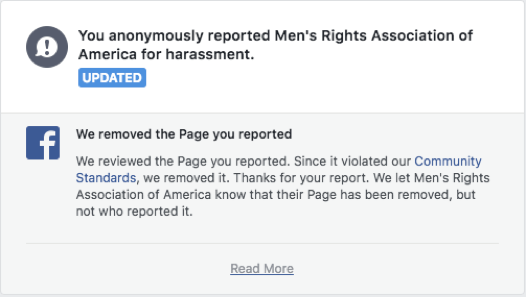

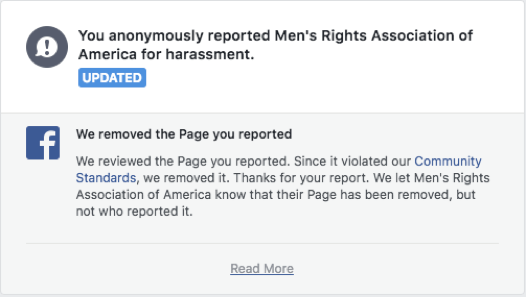

After only a year of the #MeToo movement going viral, I was already jaded from bad news and men still not “getting it.” By the time the Brett Kavanaugh hearings came up, I assumed everyone was feeling just as exhausted, until one of my friends told me she had channelled her rage into social media vigilantism.

My friend had successfully reported hateful content online, including a misogynistic men’s rights group on Facebook. In less than a day, Facebook had either taken down the groups she reported or taken down specific posts on the pages.

As users call on social media companies to do a better job at moderation, it turns out there’s something more tangible they can do: report the content first.

“When you encounter groups that you find to be in violation of platform policy or that are very toxic, it is useful for you to report them,” Kat Lo, an online community researcher at the University of California, Irvine, told me in an email. “There is an uncomfortable relationship if you’re trusting that companies are just trawling and reading everybody’s social interactions, so a lot of them try to make it report-based.”

Of course, the real onus of making the internet safer is still on social media platforms to actually enforce their terms of service and to continue improving their own content moderation methods. Facebook tries to make sure its billions of users are complying with its community standards, including a section on hate speech that specifically notes that “dehumanizing speech” is not allowed. Twitter’s rules and policies lay out a specific “Hateful conduct policy” that prohibits “inciting fear” and “hateful imagery,” among other harmful posts.

And while companies do have to operate in accordance with the laws of different jurisdictions, such as the European Union’s General Data Protection Regulation, most platforms aren’t jumping at the chance to be regulated.

Facebook, Twitter, and other major platforms also use both AI and human moderators to police content, but Lo warns that algorithms lack context. “The context of a post very much determines how you treat individual statements. There is no feasible way for us to computationally determine context and intentionality,” she said.

Evan Balgord, the executive director of the Canadian Anti-Hate Network, explained that reporting is especially helpful to draw attention to phrases that moderators might not know or AI systems can’t catch, such as when hate groups use coded language (academics call this “dog whistling”) that only certain groups are meant to understand. For example, white supremacists use “Day of the Rope” when referring to lynching “race traitors.” I looked up this death threat term on Twitter, and immediately found suspicious activity.


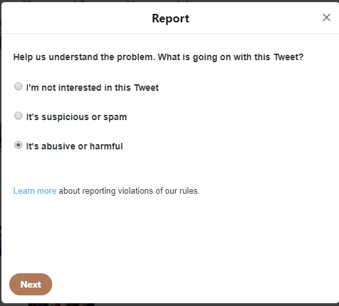

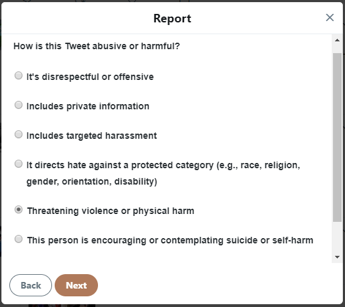

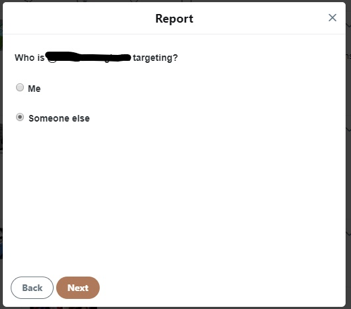

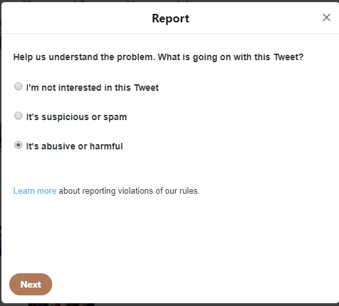

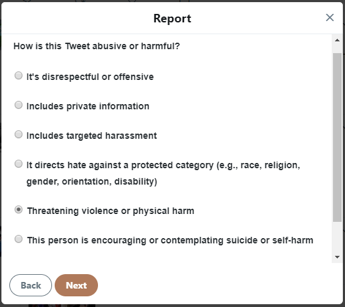

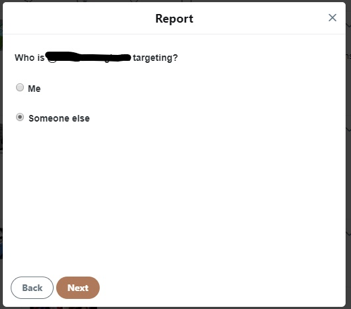

After I flagged a Day of the Rope tweet, Twitter gave me the option to add four additional tweets from the offending account. When a post is offensive but not harmful, it’s often difficult to find more explicitly troubling content. This time, I found five immediately.

Users who report content do the important job of “protect[ing] other bystanders from potentially experiencing harm,” said Kumar.

It’s not up to users to fix social media’s trouble with hateful content, and for the sake of your sanity, it’s not recommended to go out of your way to look for it.

After all, moderation is a full-time job. Additionally, because hateful content is so pervasive, increased bystander reporting is unlikely to shift the onus of moderation from platforms to their users. Instead, Lo said, it helps “social media companies identify where their systems are failing” in spotting hate groups.

Plus, it is one of the few avenues available, now that going through the courts is harder than ever. Richard Warman, an Ottawa human rights lawyer and board member of the Canadian Anti-Hate Network, was an early practitioner of reporting content that violated the law. Prior to 2013, section 13 of the Canadian Human Rights Act essentially prohibited people from using telephones and the internet to spread hate propaganda against individuals or groups.

Warman filed 16 human rights complaints against hate groups through section 13. All 16 cases were successful.

In 2013, Canada’s Conservative government repealed section 13 under the argument that it was “an infringement on freedom of expression.” Today, the only way to prosecute hate speech in Canada is through the Criminal Code. The code makes advocating genocide and public incitement of hatred towards “identifiable groups” illegal on paper. Unfortunately, Warman says it is far more difficult to file complaints through the Criminal Code due to several factors, such as the lack of dedicated police hate crimes units.

Since the repeal of section 13, Warman says the lack of human rights legislation has allowed the internet to become a “sewer.” “It permits people to open the tops of their skulls and just let the demons out in terms of hate propaganda,” he said.

In the United States, prosecuting hate speech is near impossible. The Supreme Court has reiterated that the First Amendment protects hate speech, most recently in 2017 in Matal v. Tam. Additionally, hate crime statutes vary across states.

The terrifying repercussions of online hate have become undeniably clear, from the van attack in Toronto linked to 4chan to the propaganda on Facebook that helped lead to the Rohingya genocide. The discussion over content moderation has become about preventing openly harmful speech, not defending free speech.

So how can we combat such a mess? Even if reporting doesn’t result in platforms taking down content, Warman points out it creates a historical record that can help keep companies accountable should something more sinister happen as a result of the posts.

Kumar offered further reassurance. “For most of their existence, social media platforms have maintained a convenient ‘hands-off approach’ to content moderation: out of sight, out of mind,” she said. “We saw in 2018 that there are greater calls for consultations between social media industry leaders, governments, policymakers, and internet researchers.”

In 2018, then Canadian justice minister Jody Wilson-Raybould (now Minister of Veterans Affairs) hinted that the Liberal government may revisit section 13. Twitter addressed promoting “healthy conversation.” Mark Zuckerberg promised Facebook would be more proactive about identifying harmful content and about working with governments on regulations. Facebook says that in 2019 it will even offer appeals for content its moderators have deemed not in violation of its policies.

You don’t have to be a dedicated internet vigilante to do your part. Go report that awful content you come across instead of just scrolling past. Or if you see someone you know on a personal level sharing hate propaganda, Warman points out that you can always take the old-school method of standing up for people. “Just call them out and say, ‘Stop being an asshole.’”

Listen to CYBER, Motherboard’s new weekly podcast about hacking and cybersecurity.



Human moderators can also struggle to understand the full context of posts, because they are asked to moderate a high volume of posts and sometimes have to moderate posts from countries or in languages they don’t know very well. Working with “highly toxic” content has even led one moderator to sue Facebook because she believes the job gave her PTSD.

But users can take action, too. “Online, we don’t have that face-to-face connection so we feel more anonymous and we have a certain level of disinhibition that would allow us to communicate differently,” said Priya Kumar, a research fellow at the Social Media Lab at Ryerson University in Toronto.

Sometimes the lack of restraint is toxic. “This is where you see a rise of threats, aggressive behavior, or of instability,” said Kumar. But it doesn’t have to be. The disinhibition anonymity creates can also make reporting dangerous content easier.

Users reported 2,698,613 accounts for hateful conduct on Twitter from January to June 2018, and 285,393 of those reports resulted in Twitter taking action, a little more than 10 percent. Facebook says it reviews more than 2 million pieces of content a day and aims to have a response within 24 hours, depending on how clear-cut the case is.

Facebook actually considers user reports an important part of moderation, a Facebook spokesperson told Motherboard over email. “Something like ‘you’re crazy’ may be an inside joke between friends, but it could also be an insult exchanged between people who have a history with one another. We aren’t privy to that offline context, which is why user reports are really important,” they said.

Read More: The Impossible Job: Inside Facebook’s Struggle to Moderate Two Billion People


Twitter requires the user violating its code of conduct to delete the offending tweet before allowing them to tweet again, rather than just taking it down. In an October 2018 blog post, Twitter explained, “Once we’ve required a Tweet to be deleted, we will display a notice stating that the Tweet is unavailable because it violated the Twitter Rules.”

When I checked back two weeks later, the Twitter account was still up, but the tweets I reported were no longer available. It was a small action, to be sure, but because I reported something terrible, others didn’t have to see it come up in their feeds.
