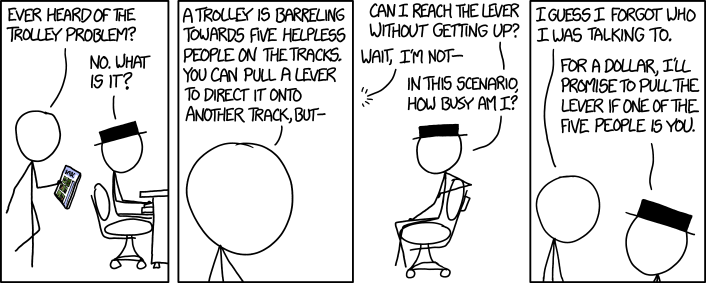

One of the key principles in computability theory—and computing generally—will prevent artificial intelligence programs from correctly determining whether or not to kill. This fundamental barrier is the famous Halting problem, according to a recent paper by the ethicist Matthias Englert and a group of thinkers based at the Darmstadt University of Technology in Germany.The general idea under consideration is whether or not a robot can make an ethical determination. How capable is an algorithm of sorting right from wrong?We might program a machine with some deep array of values and principles, hard-wire the thing with the Geneva Conventions, yet, based on the "undecidability of the Halting problem," it can't be counted on to make a correct decision. This is what Englert and co. set out to prove.The ethicists describe four different scenarios, falling into the categories, "lesser of two evils," "lack of predetermination," "insufficient information," and, finally, computability. The last one gets the most attention and would seem to have the deepest implications for the overall question just by addressing the best-case circumstance where the thing has been programmed as perfectly as possible. Here's where we figure out what "possible" might really mean.The question, which the team admits is more of a thought-experiment (gedankenexperiment) than anything super-rigorous or technical, is posed via the "trolley problem," a famous hypothetical scenario used in psychology, cognitive science, and, yes, computer science. You can direct the runaway trolley onto another track where it will kill just one person instead of the hapless group of innocents. So: do you save the five helpless people and kill the one or do you let the many die by not doing anything?The version of the trolley problem addressing computability imagines that instead of a human being standing at the switch making the decision it's a computer program or algorithm.Here is the whole damn thing:On the occasion of repairing the rusted switch, also a fully-automated lever frame is to be installed in the switch tower. However the engineer who created the new device happens to be the (ostensibly repenting) villainess. You are thus suspicious of whether to trust the software she included in the control: It might on some occasion deliberately direct an approaching trolley onto a track closed for renovation by the workers. On the other hand she does deliver the unit in person and provides free access to its source code.a) Still suspicious, you detain her until having hand-checked the code according to whether it indeed avoids in all cases (i.e. on all inputs) any switch setting that would direct a train to a reserved track.b) Similarly to (a), but now your job is replaced by a robot: a highly efficient computer controlled autonomous agent supposed to decide whether (and for how long) to arrest the engineer.c) Similarly to (b), but now the suspect in addition promises her software to run in linear time.Let moral behaviour (of you or the robot) mean the following: If the programmer has devised a fully functional control, she eventually must be released and allowed to install the device; otherwise, namely in case the code is malicious, its creator must remain in custody.The conclusion reached by Engler et al is that the algorithm can't figure out a moral solution. Can the "bad" person create a "good" thing? Can that be determined?The Halting problem says no, according to the ethicists. Said problem, another foundational computing concept courtesy of Alan Turing, addresses the general question of whether or not a given computer program will, given some input data, terminate or continue running forever.The actual problem arises when we ask if it's possible to make an algorithm or make a computer program that's capable of the above determination. So: an algorithm to evaluate whether another algorithm will halt or loop forever, or will kill the one or let the five die. That evaluation algorithm is impossible, which is the Halting problem itself, a conclusion that, while proved pretty simply, is probably worth its own post.Here the algorithm checking algorithm is applied to the villianess' switch program, to ill results. The second algorithm can't correctly predict all of the behaviors of the first; only the first can do that. If we look at the two decisions of the switch algorithm, five innocents vs. one goofball, as halting (innocents) vs. continueing (goofball), an algorithm can't determine whether the switch wll make the correct moral choice every time. It's a problem of infinity: to know a problem (a general program) does not halt, you would have to observe it for an infinite-plus-one period of time.What Engler is mostly saying, however, is that algorithms do unexpected things; software has bugs. Sometimes code is only executed in special circumstances. Etc. An algorithm programmed to do the right thing might do the wrong thing.It's not actually all that satisfying, but offers one recommendation that could be worthwhile: a developer of a certain moral machine should be required to provide a way of verifying the machine's correctness. The Halting problem doesn't say that programs can't be proved to do the right thing, just that general programs can't predict everything about the behavior of another general program.And computer scientists indeed toil over correctness, so take heart.

You can direct the runaway trolley onto another track where it will kill just one person instead of the hapless group of innocents. So: do you save the five helpless people and kill the one or do you let the many die by not doing anything?The version of the trolley problem addressing computability imagines that instead of a human being standing at the switch making the decision it's a computer program or algorithm.Here is the whole damn thing:On the occasion of repairing the rusted switch, also a fully-automated lever frame is to be installed in the switch tower. However the engineer who created the new device happens to be the (ostensibly repenting) villainess. You are thus suspicious of whether to trust the software she included in the control: It might on some occasion deliberately direct an approaching trolley onto a track closed for renovation by the workers. On the other hand she does deliver the unit in person and provides free access to its source code.a) Still suspicious, you detain her until having hand-checked the code according to whether it indeed avoids in all cases (i.e. on all inputs) any switch setting that would direct a train to a reserved track.b) Similarly to (a), but now your job is replaced by a robot: a highly efficient computer controlled autonomous agent supposed to decide whether (and for how long) to arrest the engineer.c) Similarly to (b), but now the suspect in addition promises her software to run in linear time.Let moral behaviour (of you or the robot) mean the following: If the programmer has devised a fully functional control, she eventually must be released and allowed to install the device; otherwise, namely in case the code is malicious, its creator must remain in custody.The conclusion reached by Engler et al is that the algorithm can't figure out a moral solution. Can the "bad" person create a "good" thing? Can that be determined?The Halting problem says no, according to the ethicists. Said problem, another foundational computing concept courtesy of Alan Turing, addresses the general question of whether or not a given computer program will, given some input data, terminate or continue running forever.The actual problem arises when we ask if it's possible to make an algorithm or make a computer program that's capable of the above determination. So: an algorithm to evaluate whether another algorithm will halt or loop forever, or will kill the one or let the five die. That evaluation algorithm is impossible, which is the Halting problem itself, a conclusion that, while proved pretty simply, is probably worth its own post.Here the algorithm checking algorithm is applied to the villianess' switch program, to ill results. The second algorithm can't correctly predict all of the behaviors of the first; only the first can do that. If we look at the two decisions of the switch algorithm, five innocents vs. one goofball, as halting (innocents) vs. continueing (goofball), an algorithm can't determine whether the switch wll make the correct moral choice every time. It's a problem of infinity: to know a problem (a general program) does not halt, you would have to observe it for an infinite-plus-one period of time.What Engler is mostly saying, however, is that algorithms do unexpected things; software has bugs. Sometimes code is only executed in special circumstances. Etc. An algorithm programmed to do the right thing might do the wrong thing.It's not actually all that satisfying, but offers one recommendation that could be worthwhile: a developer of a certain moral machine should be required to provide a way of verifying the machine's correctness. The Halting problem doesn't say that programs can't be proved to do the right thing, just that general programs can't predict everything about the behavior of another general program.And computer scientists indeed toil over correctness, so take heart.

Advertisement

Advertisement

Advertisement